Housekeeping Service


Datameer's housekeeping is a background service that improves processing by deleting obsolete data from HDFS, removing old entries from job history, and removing unsaved workbooks.

The housekeeping policy is defined by count, where data objects above a given number are marked for deletion (e.g. keep 10 data objects; when object 11 comes in, delete object 1), by time (e.g. at the end of every business day), or by a combination of both. Data entities that are set to be deleted are first marked for deletion. Deletion follows the site-specific retention policy, which the Datameer X administrator can configure in the default.properties file.
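The count-based rule can be illustrated with a minimal Python sketch. It is not Datameer code: the function name `apply_count_retention` and the integer object IDs are hypothetical, and the sketch only mirrors the "keep 10, object 11 pushes out object 1" example above.

```python
from collections import deque


def apply_count_retention(object_ids, keep=10):
    """Return (kept, marked_for_deletion) under a keep-the-newest-N policy.

    object_ids must be ordered from oldest to newest.
    """
    kept = deque()
    marked = []
    for object_id in object_ids:
        kept.append(object_id)
        if len(kept) > keep:
            # The oldest object falls out of the retention window.
            marked.append(kept.popleft())
    return list(kept), marked


# Objects 1..11 arrive in order; only the 10 newest are kept.
kept, marked = apply_count_retention(range(1, 12), keep=10)
print("kept:", kept)
print("marked for deletion:", marked)
```

A time-based policy would replace the length check with an age check; combining both means an object is marked as soon as either condition applies.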

A property that delays file deletion from HDFS for a period longer than the standard backup interval can be used to configure failover behavior. Similarly, a property that delays data deletion after a Datameer X upgrade can be used to ensure that a rollback succeeds if one is needed.

Housekeeping Data Deletion

- job history after 28 days

- unsaved workbooks after 3 days

- data in status 'MARKED_FOR_DELETION' on the Hadoop cluster after 30 minutes

INFO: After the data status has been set to MARKED_FOR_DELETION, filesystem artifacts (e.g. job logs) are deleted in two steps. First, the database entry is deleted and a new entry containing the path to the HDFS object is created in the 'FilesystemArtifactToDelete' table, with its state column set to 'WAITING_FOR_DELETION'. Second, the housekeeping service collects all entries in state 'WAITING_FOR_DELETION' from the 'FilesystemArtifactToDelete' table and tries to delete their paths from HDFS. If the deletion succeeds, the entry is removed from the table.

- data entities in the database that are in status 'DELETED'

If a deletion fails, the entry's state column is set to 'DELETION_FAILED'.
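The two-step flow described above can be sketched in Python as follows. This is a simplified model, not Datameer's implementation: `ArtifactEntry`, `schedule_artifact`, `run_housekeeping`, and the `delete_path` callback are hypothetical names, the tables are plain in-memory containers, and whether failed entries are retried in a later run is not stated in this document, so the sketch simply leaves them in place.

```python
from dataclasses import dataclass

WAITING_FOR_DELETION = "WAITING_FOR_DELETION"
DELETION_FAILED = "DELETION_FAILED"


@dataclass
class ArtifactEntry:
    path: str
    state: str = WAITING_FOR_DELETION


def schedule_artifact(data_rows, artifact_table, data_id):
    """Step 1: remove the data row and queue its storage path for deletion."""
    path = data_rows.pop(data_id)
    artifact_table.append(ArtifactEntry(path))


def run_housekeeping(artifact_table, delete_path):
    """Step 2: try to delete every waiting path and return the remaining entries."""
    remaining = []
    for entry in artifact_table:
        if entry.state != WAITING_FOR_DELETION:
            remaining.append(entry)
            continue
        try:
            delete_path(entry.path)  # success: the entry is dropped from the table
        except OSError:
            entry.state = DELETION_FAILED
            remaining.append(entry)
    return remaining


def fake_delete(path):
    # Stand-in for a storage client; fails for one path to show the failure state.
    if path.endswith("/101"):
        raise OSError("simulated storage error")


data_rows = {
    1: "hdfs://nameservice1/user/datameer/workbooks/1/1",
    2: "hdfs://nameservice1/user/datameer/jobhistory/100/101",
}
artifact_table = []
for data_id in list(data_rows):
    schedule_artifact(data_rows, artifact_table, data_id)

for entry in run_housekeeping(artifact_table, fake_delete):
    print(entry.state, entry.path)
```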

Physical Data Deletion


The database table 'filesystem_artifact_to_delete' tracks the deletion of physical (HDFS/distributed storage) artifacts.

Example:

| ID | Owner | Status | Storage Path |
|---|---|---|---|
| 111 | 123 | 0 | hdfs://nameservice1/user/datameer/workbooks/1/1 |
| 222 | 456 | 1 | hdfs://nameservice1/user/datameer/jobhistory/100/101 |
| 333 | 789 | 2 | hdfs://nameservice1/user/datameer/jobhistory/100/201 |

The status column entries are:

- '0' stands for 'WAITING_FOR_DELETION': the artifact is scheduled for deletion during the next Housekeeping Service run

- '1' stands for 'DELETION_FAILED': deleting the artifact from distributed storage failed during a Housekeeping Service run

- '2' stands for 'DATA_DELETION_DISABLED': the artifact is scheduled for deletion, but deletion from distributed storage has been disabled via the flag 'housekeeping.data.deletion.disabled=true'
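When inspecting rows exported from this table, it can help to translate the numeric status into its name. The following Python sketch assumes the rows are already available as tuples in the column order of the example table; the enum `ArtifactStatus` and the function `describe` are illustrative names, not part of Datameer.

```python
from enum import IntEnum


class ArtifactStatus(IntEnum):
    WAITING_FOR_DELETION = 0
    DELETION_FAILED = 1
    DATA_DELETION_DISABLED = 2


# Rows copied from the example table: (ID, Owner, Status, Storage Path)
rows = [
    (111, 123, 0, "hdfs://nameservice1/user/datameer/workbooks/1/1"),
    (222, 456, 1, "hdfs://nameservice1/user/datameer/jobhistory/100/101"),
    (333, 789, 2, "hdfs://nameservice1/user/datameer/jobhistory/100/201"),
]


def describe(rows):
    """Replace the numeric status code with its symbolic name."""
    return [
        (row_id, ArtifactStatus(status).name, path)
        for row_id, _owner, status, path in rows
    ]


for row_id, status_name, path in describe(rows):
    print(row_id, status_name, path)
```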

Housekeeping Does Not Delete

- data not marked for deletion (configurable for failover and rollback reasons)

- data in status 'MARKED_FOR_DELETION' that is used in a running job

- data referenced by an active workbook snapshot

- data of a sheet that is linked in a different workbook and marked as kept (in this case the data is copied instead of being referenced)

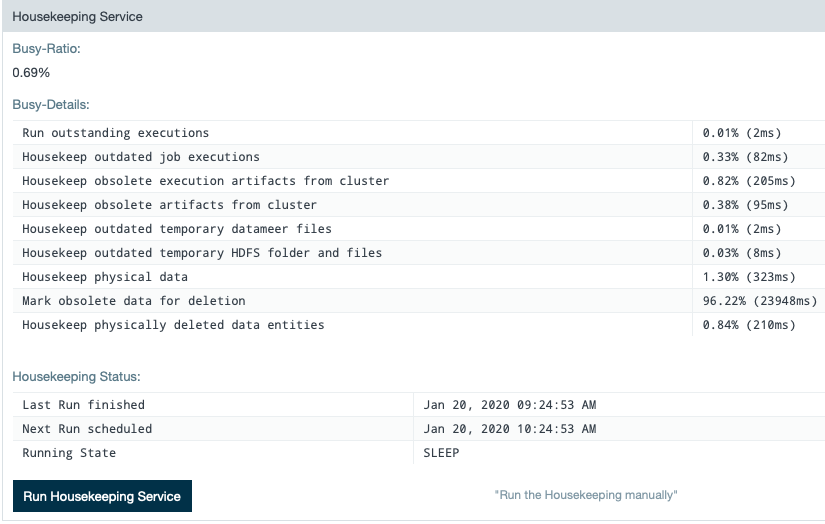

Housekeeping Monitoring


Find Datameer's 'Housekeeping Monitor' under /<host:port>/dev/housekeeper.

Here you get information about:

- busy-ratio

- busy-details

- Housekeeping status

Configuring Housekeeping

The housekeeping configuration settings can be found in the /wiki/spaces/DASSB70/pages/33036121056 file in Datameer.

################################################################################################
## Housekeeping configuration
################################################################################################
housekeeping.enabled=true

# Disable data deletion from hdfs, when doing a housekeeping run the files in database will be deleted but not in hdfs.
# Then the files will be logged into the database table 'filesystem_artifact_to_delete' and to conductor.log
housekeeping.data.deletion.disabled=false

# Define the maximum number of days job executions are saved in the job history, after a job has been completed.
housekeeping.execution.max-age=28d

# Maximum number of out-dated executions that should be deleted per housekeeping run
housekeeping.run.delete.outdated-job-executions=50

# To allow for better failover due to a crashed database, deleted data should be kept longer than the configured
# frequency of database backups.
housekeeping.keep-deleted-data=2h

# Don't delete any data on HDFS for this period of time after an upgrade. This allows for a safe rollback to
# a previous version
housekeeping.keep-deleted-data-after-upgrade=2d

# Maximum number of out-dated data objects that should be marked for deletion per housekeeping run
housekeeping.run.mark-for-deletion.outdated-data-objects=200

# Maximum number of out-dated data objects that should be deleted per housekeeping run
housekeeping.run.delete.outdated-data-objects=50

# Maximum number out-dated data artifacts that should be deleted from HDFS per housekeeping run
housekeeping.run.delete.outdated-data-artifacts=100

# Maximum number out-dated file artifacts that should be deleted from HDFS per housekeeping run
housekeeping.run.delete.outdated-file-artifacts=10000

# Define the maximum number of days unsaved workbooks are stored in the database.
housekeeping.temporary-files.max-age=3d

# Minimum time to keep files in temporary folder after last access.
housekeeping.temporary-folder-files.max-age=30d

# Maximum number of out-dated temporary conductor files that should be deleted per housekeeping run
housekeeping.run.delete.outdated-temporary-files=50

# Maximum number of attempts for each task per housekeeping run
housekeeping.run.task-attempts-per-run=50

# The time that the housekeeping service falls asleep after each cycle
housekeeping.sleep-time=1h
################################################################################################
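Several of these properties take a duration such as 28d, 2h, or 1h. The Python sketch below shows one way to read those values when auditing a configuration outside of Datameer. It assumes that the suffix d means days and h means hours (the comments in the file mention days; the hour suffix is an assumption), and that only these two suffixes occur. `parse_duration` and `load_durations` are hypothetical helpers.

```python
from datetime import timedelta

# Assumed meaning of the unit suffixes used in the housekeeping properties.
UNITS = {"d": "days", "h": "hours"}


def parse_duration(value):
    """Convert a value such as '28d' or '2h' into a timedelta."""
    value = value.strip()
    if len(value) < 2 or value[-1] not in UNITS or not value[:-1].isdigit():
        raise ValueError(f"not a duration: {value!r}")
    return timedelta(**{UNITS[value[-1]]: int(value[:-1])})


def load_durations(properties_text):
    """Return every key whose value parses as a duration; skip all other lines."""
    durations = {}
    for line in properties_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        try:
            durations[key.strip()] = parse_duration(value)
        except ValueError:
            continue  # booleans and plain counts are not durations
    return durations


sample = """
housekeeping.enabled=true
housekeeping.execution.max-age=28d
housekeeping.keep-deleted-data=2h
housekeeping.run.delete.outdated-job-executions=50
housekeeping.sleep-time=1h
"""
for key, duration in load_durations(sample).items():
    print(key, duration)
```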

The default configuration works well for most enterprise environments and limits the volume of artifacts the housekeeping service touches during one transaction. The housekeeping service itself runs as long as there are objects to delete and performs multiple transactions as necessary. By default the service strives for the largest possible delete count. Unless you want to keep artifacts longer or change the number of transactions per run, there is no need to change the default configuration.

Adjusting for failover protection

You can delay deleting files from HDFS for a period longer than the backup interval by adding this property in conf/live.properties:

# To allow for better failover due to a crashed database, deleted data should be kept longer than the configured
# frequency of database backups.
housekeeping.keep-deleted-data=2h

For more information see Configuring a Server for Datameer X Failover.
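The rule behind this setting is that deleted data should outlive the gap between two database backups. A minimal Python check of that rule might look like the sketch below; the 24-hour backup interval is a made-up example value and `covers_backup_interval` is a hypothetical helper, not a Datameer function.

```python
from datetime import timedelta


def covers_backup_interval(keep_deleted_data, backup_interval):
    """True when deleted data is kept longer than the time between two backups."""
    return keep_deleted_data > backup_interval


keep_deleted_data = timedelta(hours=2)  # housekeeping.keep-deleted-data=2h
backup_interval = timedelta(hours=24)   # hypothetical nightly database backup

# Prints False: with a nightly backup, 2h is too short for safe failover.
print(covers_backup_interval(keep_deleted_data, backup_interval))
```

In this hypothetical setup, a value such as 2d (the format already used by other housekeeping properties) would satisfy the check.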

Adjusting for rollback

You can delay deleting files from HDFS following a Datameer X product upgrade by adding this property in conf/live.properties:

# Don't delete any data on HDFS for this period of time after an upgrade. This allows for a safe rollback to
# a previous version
housekeeping.keep-deleted-data-after-upgrade=2d

For more information see /wiki/spaces/DASSB70/pages/33036120776.

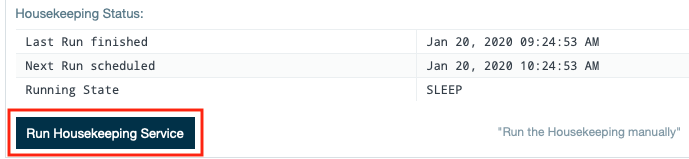

Run Housekeeping Manually


To run the 'Housekeeping Service' manually, click the "Run Housekeeping Service" button at the bottom of the page.

Housekeeping Service Log Files


Housekeeping service information is stored separately from the conductor.log. The housekeeping log files can be found in <Datameer>/logs/housekeeping.log*.

The default configuration writes the housekeeping service log until the file reaches 1MB and then starts a new log file. This happens up to 10 times, for a total of 11 housekeeping log files. After that, each new log file replaces the oldest one in a repeating cycle. Review all existing files in <Datameer>/logs/housekeeping.log* for a complete understanding of housekeeping status. See /wiki/spaces/DASSB70/pages/33036120989 for more information and for how to change the default behavior of the file appender.
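Because the log is spread over several rolled files, a small script can collect them before reviewing. The Python sketch below lists every file matching housekeeping.log* in a given directory, ordered by modification time, and computes the upper bound on disk usage implied by the defaults described above. The function names are hypothetical, the log directory has to be supplied by the caller, and 1MB is taken as 1024 * 1024 bytes, which is an assumption.

```python
from pathlib import Path


def housekeeping_logs(log_dir):
    """Return all housekeeping.log* files in log_dir, least recently modified first."""
    files = Path(log_dir).glob("housekeeping.log*")
    return sorted(files, key=lambda path: path.stat().st_mtime)


def max_log_footprint(max_file_bytes=1024 * 1024, rollovers=10):
    """Upper bound in bytes: the active file plus the rolled-over files."""
    return max_file_bytes * (rollovers + 1)


if __name__ == "__main__":
    # Replace "." with the logs directory of the Datameer installation.
    for log_file in housekeeping_logs("."):
        print(log_file.name, log_file.stat().st_size)
    print("maximum footprint in bytes:", max_log_footprint())
```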

The log contains the following entries:

Service run start

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:155) - Starting housekeeping

...

Example: Service run

...

[system] INFO [<timestamp>] [HousekeepingService thread-1] (JobExecutionService.java:461) - Deleted 45 executions: <id>, <id>, ... , <id>

...

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:410) - Deleting artifact temp/job-<jobExecutionID>.

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:413) - Deleting <fs>:///<path>/job-<jobExecutionID>

...

Info messages

Artifacts that the Housekeeping service hasn't yet deleted are logged in the following way:

...

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:447) - Not deleting WorkbookData[id=<configID>,status=MARKED_FOR_DELETION], because it is at least referenced in the kept sheet '<sheetName>' of workbook '/<DatameerPath>/<workbookName>.wbk'.

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:447) - Not deleting DataSourceData[id=<ID>,status=MARKED_FOR_DELETION], because it is at least referenced in the kept sheet '<sheetName>' of workbook '/<DatameerPath>/<workbookName>.wbk'.

...

Based on this information, you can review the data retention settings of the referenced workbooks and worksheets.
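To find out which workbooks keep data alive, the "Not deleting" messages can be extracted with a regular expression. The Python sketch below is written against the message format shown above; the IDs, sheet names, and workbook paths in the sample lines are invented for illustration, and a sheet or workbook name containing a single quote would not be matched by this simple pattern.

```python
import re

NOT_DELETING = re.compile(
    r"Not deleting (?P<kind>\w+)\[id=(?P<id>[^,\]]+),status=(?P<status>\w+)\], "
    r"because it is at least referenced in the kept sheet '(?P<sheet>[^']*)' "
    r"of workbook '(?P<workbook>[^']*)'"
)


def kept_references(lines):
    """Return one dict per 'Not deleting' message found in the given lines."""
    references = []
    for line in lines:
        match = NOT_DELETING.search(line)
        if match:
            references.append(match.groupdict())
    return references


# Sample lines with invented values in place of the placeholders.
sample_lines = [
    "[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:447) - "
    "Not deleting WorkbookData[id=42,status=MARKED_FOR_DELETION], because it is at least "
    "referenced in the kept sheet 'Sheet1' of workbook '/Analytics/Sales.wbk'.",
    "[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:155) - "
    "Starting housekeeping",
]

for reference in kept_references(sample_lines):
    print(reference["workbook"], reference["sheet"], reference["kind"], reference["id"])
```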

Example: Clean up

...

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:358) - Setting data status of (WorkbookData:<dataID>) to DELETED

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:362) - Deleting <fs>:/<path>/workbooks/<configID>/<jobID>

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:358) - Setting data status of (DataSourceData:<dataID>) to DELETED

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:362) - Deleting <fs>:/<path>/importlinks/<configID>/<jobID>

...

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:109) - Next check for physical data to delete will start at offset 109.

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:499) - Deleted data WorkbookData[id=<dataID>,status=DELETED] created by DapJobExecution{id=<jobID>, type=NORMAL, status=COMPLETED}

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:499) - Deleted data DataSourceData[id=<dataID>,status=DELETED] created by DapJobExecution{id=<jobID>, type=NORMAL, status=COMPLETED}

...

Example: Summary after a successful run

...

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:116) - Next check for physical data to delete will start at the beginning again.

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:225) - Housekeep physically deleted data entities: 1.04% (62ms)

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:225) - Housekeep outdated job executions : 49.92% (2986ms)

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:225) - Housekeep obsolete artifacts from cluster : 0.69% (41ms)

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:225) - Housekeep outdated temporary datameer files: 0.02% (1ms)

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:225) - Housekeep outdated temporary HDFS folder and files: 0.20% (12ms)

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:225) - Housekeep physical data : 48.01% (2872ms)

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:228) - Business: 0.2%

[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:205) - Housekeeping done. took 5 sec
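The per-task lines of the summary can be parsed to see where a run spent its time. The following Python sketch assumes the summary format shown in the example above, with one log entry per line; `parse_summary` is a hypothetical helper and the two sample lines are copied from that example.

```python
import re

# Matches e.g. "- Housekeep outdated job executions : 49.92% (2986ms)"
SUMMARY = re.compile(r"- (Housekeep .+?)\s*: ([\d.]+)% \((\d+)ms\)")


def parse_summary(lines):
    """Return (task, percent, milliseconds) tuples, slowest task first."""
    tasks = []
    for line in lines:
        match = SUMMARY.search(line)
        if match:
            tasks.append((match.group(1), float(match.group(2)), int(match.group(3))))
    return sorted(tasks, key=lambda task: task[2], reverse=True)


sample_lines = [
    "[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:225) - "
    "Housekeep physically deleted data entities: 1.04% (62ms)",
    "[system] INFO [<timestamp>] [HousekeepingService thread-1] (HousekeepingService.java:225) - "
    "Housekeep outdated job executions : 49.92% (2986ms)",
]

for task, percent, millis in parse_summary(sample_lines):
    print(f"{millis:>6} ms  {percent:>6.2f}%  {task}")
```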