Exporting to HiveServer1 and HiveServer2

Export data to a HiveServer1 or HiveServer2 database.

HiveServer1 is being deprecated and will be removed as a connector after Datameer v7.5.

Configuring Hive as a Connection

A HiveServer1 or HiveServer2 connection with Datameer must be created before exporting data (see Hive or HiveServer2 connection documentation).

As of Datameer 7.4.x, export behavior and validation for partitioned and unpartitioned tables is the same. Incorrect mapping will result in a failed export, and invalid records will be dropped from the export results. However, for unpartitioned tables with mode RECREATE, the original table definition is used to recreate the table and only columns with name and type are replaced by export sheet definition.

Note that it is possible to disable value validation, should you choose, by setting the custom Hadoop property hive.server2.export.record.validation=false.

Configuring Export Jobs with a Hive Connection

To export a worksheet to a Hive connector:

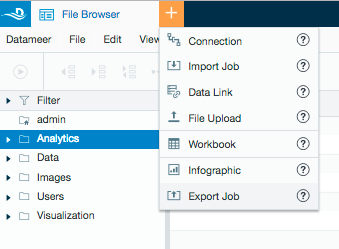

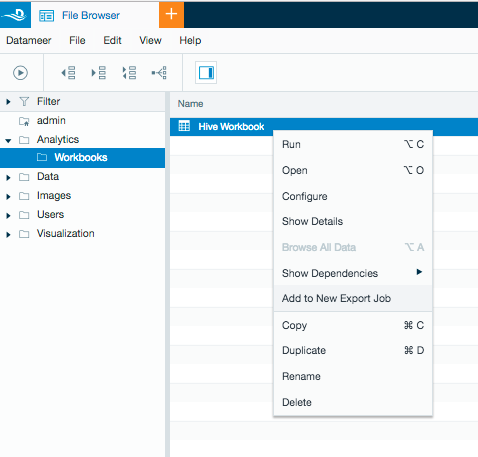

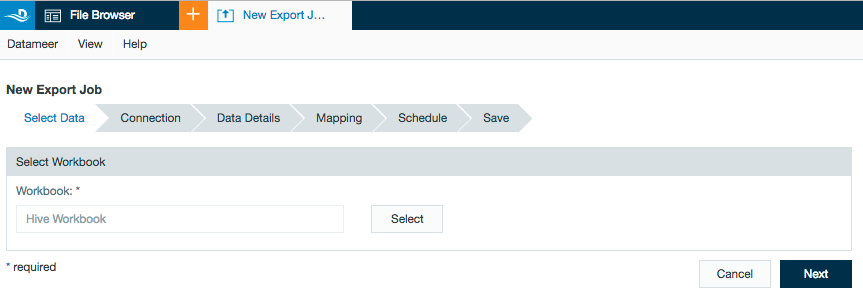

- Select the + (plus) at the top left of the File Browser and select Export Job or right-click on the workbook to export from and select New Export Job.

Select the workbook to export from in the browser.

As of Datameer 7.5

Datameer 7.5 has implemented automatic sorting of data via partitioned Hive columns before an export job runs.

For Hive exports in Datameer prior to version 7.5, we recommend that workbook be sorted via partitioned columns for the best performance.

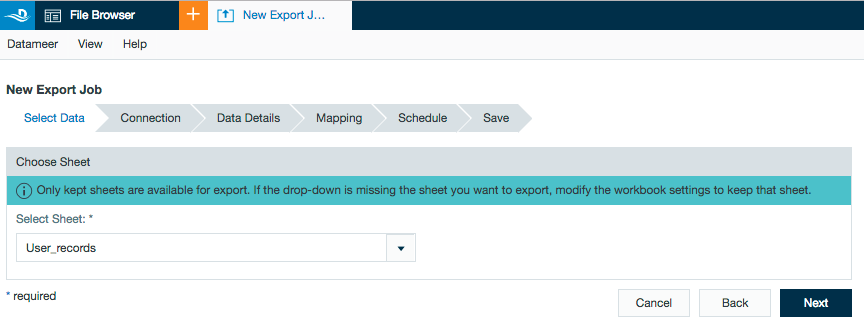

Select the worksheet to export from the drop down menu.

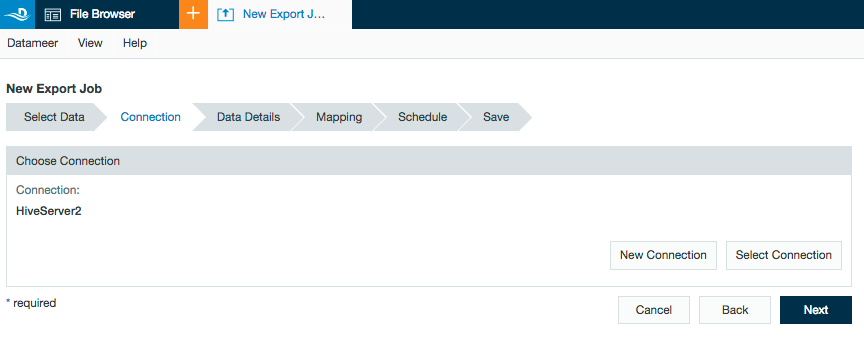

- Select the Hive or HiveServer2 connector.

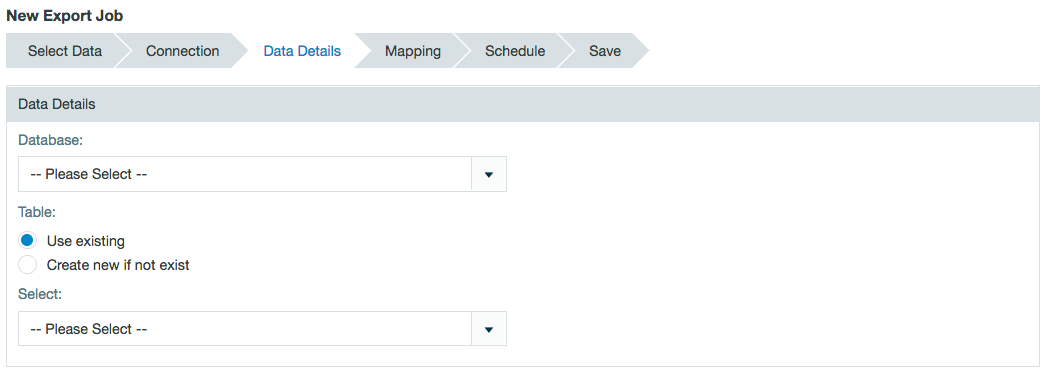

- Select a database on the Hive server from the drop down menu.

If a database filter has been added to the Hive connection settings, that filter is respected when displaying databases in the drop down list.

To export to an existing table on the Hive server, select the radio button Use existing.

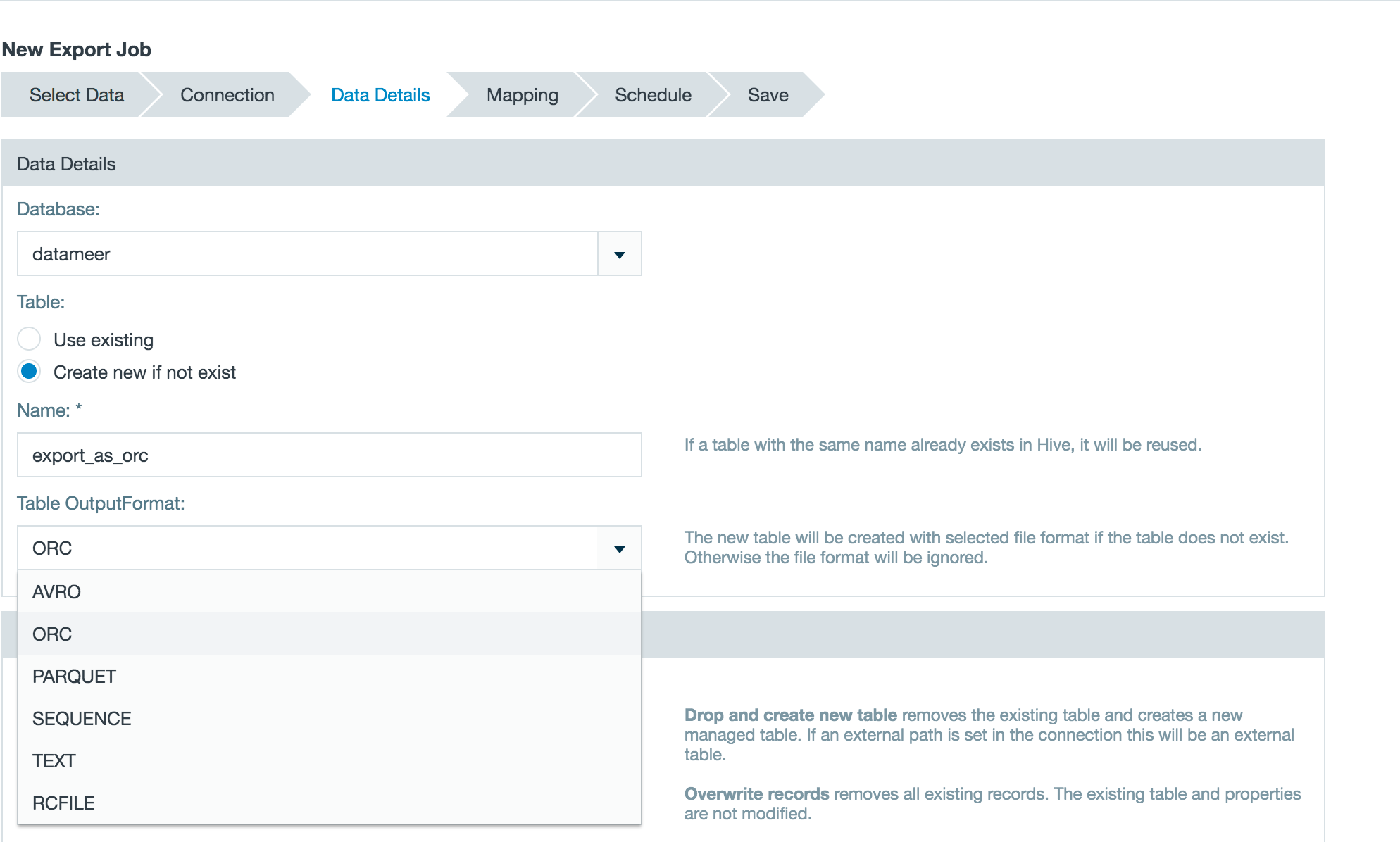

To export to a new table on a database, select the radio button Create new if not exist and enter a new table name.

Select the expected output format from the drop-down. INFO: Selecting the file format will be ignored for already existing tables.

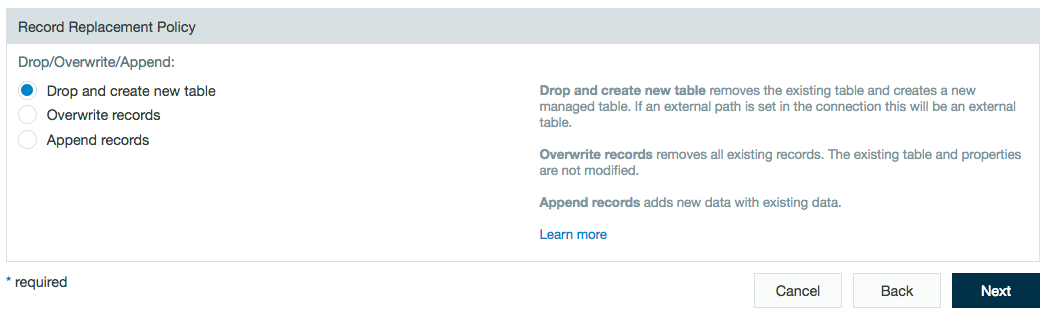

Select a record replacement policy. Learn more about database record replacement.- Drop and create new table - The existing table is dropped from the database. A new table is created with the schema defined from the worksheet being exported. If the initial table was an external one, Datameer will drop it and create a new external table. This replacement policy is only available for unpartitioned tables and is written as a TEXTFILE (plain text).

- Overwrite records - All records from the existing table are deleted and the new records are written to the table. No table properties are modified and the data is written into the original file type. (e.g., ORC)

- Append records - The records append to the existing table.

Exporting: Datameer Versions Through 7.4.x ("Classic")

Exporting into partitioned tables

- Supports exporting to an existing table on the Hive server.

- If exporting to a Hive table in a Sentry secured HDFS, the table being exported to requires read, write, and execute permissions.

Datameer exports support the Hive data types listed below in the table.

Datameer type Hive type void boolean tinyint smallint int bigint float double decimal string varchar char timestamp date binary array< data_type > STRING

BOOLEAN

FLOAT

INTEGER

DATE

BIG_DECIMAL

BIG_INTEGER

LIST

The export is written into the existing Hive output format. (e.g., ORC, Parquet, …)

Users have run into Null Pointer Exceptions when exporting into a parquet table. The root cause is a bug in the Hive implementation that uses a default codec for compression. You can solve this issue by configuring the codec: (e.g.,

org.apache.hadoop.io.compress.DefaultCodec)mapreduce.output.fileoutputformat.compress.codec=org.apache.hadoop.io.compress.DefaultCodec

Limitations on exporting to existing partitioned tables:

- Unable to write a list inside another list.

Unable to write the Hive data types "void" and "binary".

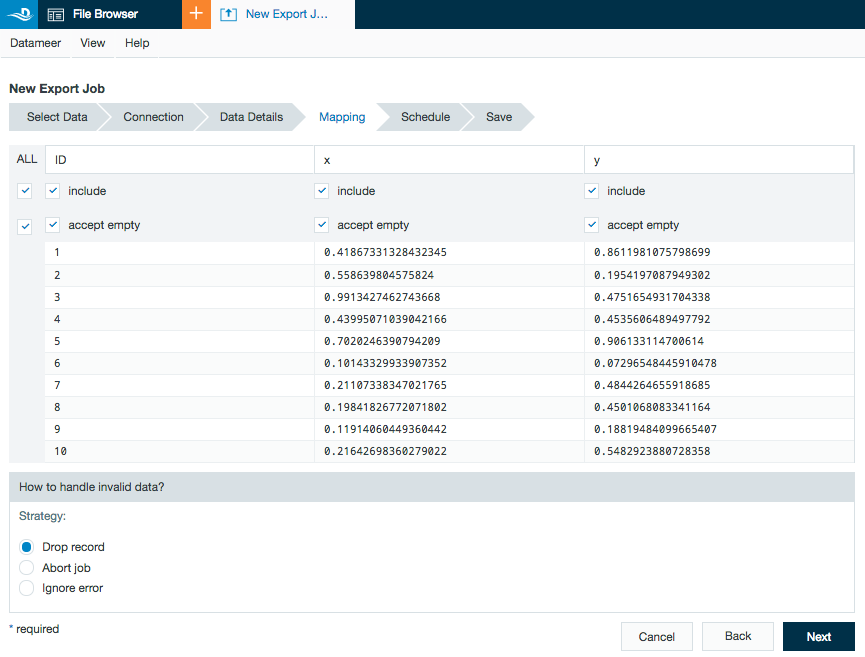

- If a record being written to the Hive server doesn't fit correctly into the Hive data type, it is dropped. The response to invalid data can be configured on the Mapping tab under "How to handle invalid data?" (see Step 6).

- This feature has been tested on a Sentry secured environment with Cloudera but has not been tested in a Ranger secured environment with Hortonworks.

Exporting into unpartitioned tables

For unpartitioned tables with mode RECREATE, the original table definition is used to recreate the table - only columns with name and type are replaced by the export sheet definition.

- Supports both exporting to a new table or into an existing table on the Hive server.

- If exporting to a Hive table in a Sentry secured HDFS, the table being exported to requires read, write, and execute permissions.

- Datameer exports support a limited number of Hive data types (see the tables below).

- The list of supported types is related to the data field mapping mode that can be configured in the Hive plug-in.

Datameer classic

Datameer type In Hive (Datameer classic) boolean bigint double string array< data_type > STRING

array<string>

array<string>BOOLEAN

array<boolean>

array<boolean>FLOAT

array<double>

array<double>INTEGER

array<bigint>

array<bigint>DATE

YYYY-mm-dd hh:MM:ss array<string>

array<string>BIG_DECIMAL

array<string>

array<string>BIG_INTEGER

array<string>

array<string>LIST

Hive specific

Datameer type In Hive (Hive specific) boolean bigint double decimal string date timestamp array< data_type > STRING

array<string>

array<string>BOOLEAN

array<boolean>

array<boolean>FLOAT

array<double>

array<double>INTEGER

array<bigint>

array<bigint>DATE

array<date> or array<timestamp>

array<date> or array<timestamp>BIG_DECIMAL

array<decimal>

array<decimal>BIG_INTEGER

array<string>

array<string>LIST

- The list of supported types is related to the data field mapping mode that can be configured in the Hive plug-in.

- The export is written in the output format TEXTFILE (plain text).

- Preview how the data is being mapped to the Hive table and select how to handle invalid data.

Requirements for exporting:

- A minimum of one column must be included.

- The column names must be unique.

Requirements for exporting into an existing partitioned table:- Column names in Datameer must match the column names in the Hive table (case sensitivity applies).

- Partitioned columns must match to the existing partition of the Hive table.

- The Datameer column data types must match the Hive column data types.

- The number of included columns from the Datameer worksheet in the export must match the number of columns in the Hive table.

Requirements for exporting into an existing unpartitioned table:- Column names in Datameer must match the column names in the Hive table. (Case insensitive)

- The column order in the Datameer export must match the column order in the Hive table.

- The Datameer column data types must match the Hive column data types.

- The number of included columns from the Datameer worksheet in the export must match the number of columns in the Hive table.

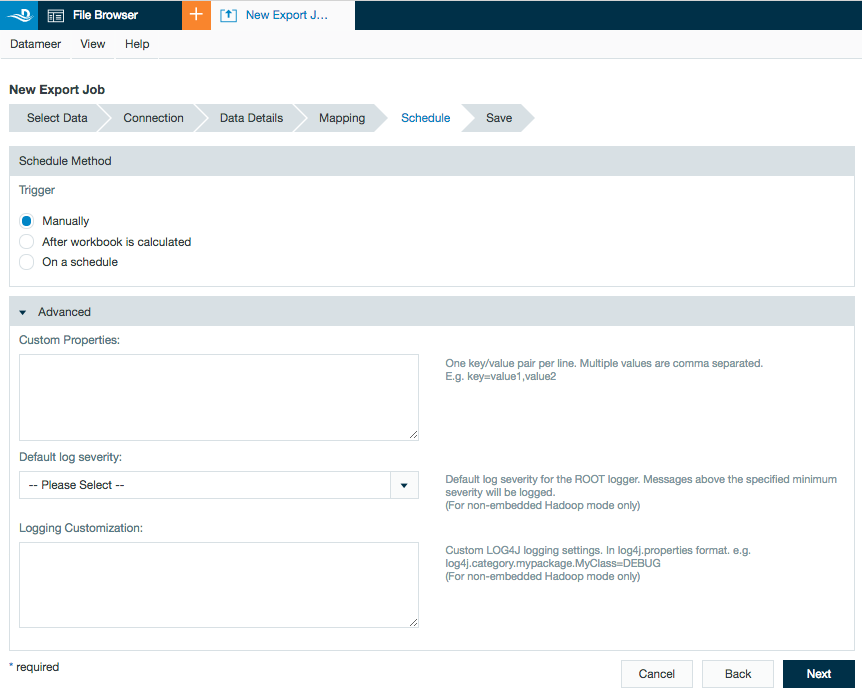

- Select when to run the export job. This can be done manually by a user, run each time the workbook in Datameer is calculated, or at specifically scheduled times.

Custom properties can be added in the advanced section as well as logging specifications.

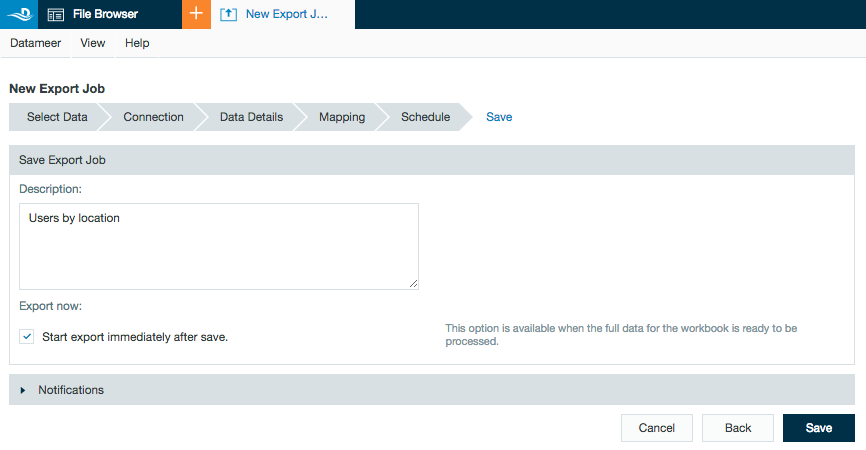

- Enter a description and click Save.

Exporting: Datameer Versions 7.5+

Implicit datatype mapping

With Datameer 7.5, direct conversion to Hive values - termed "implicit mapping" - is implemented. The mapping table below describes how Datameer creates table schema when a new one must be created, and how a Datameer value is validated and transformed into a Hive value type. See Hive documentation Allowed Implicit Conversions for further details.

For exports into an existing table, type mapping validation is performed to make sure records match the expected table column structure.

Datameer Value Type | Hive equivalent | boolean | tinyint | smallint | int | bigint | float | double | decimal | string | varchar | timestamp | date |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| STRING | string | ||||||||||||

| BOOLEAN | boolean | ||||||||||||

FLOAT | double | ||||||||||||

| INTEGER | bigint | ||||||||||||

| DATE | timestamp | ||||||||||||

| BIG_DECIMAL | - | ||||||||||||

| BIG_INTEGER | - | ||||||||||||

| List<type> | array<type> | (4) | (4) | (4) | (4) | (4) | (4) | (4) | (4) | (4) | (4) | (4) | (4) |

(♦) The default transformation for a newly created table - describes which Hive type Datameer uses when creating a new table.

(1) Transformation of Date to String by given date pattern, otherwise the default Hive date pattern (yyyy-MM-dd HH:mm:ss) will be used to format.

(2) Datameer BigDecimal has an unlimited precision/scale; this conversion is limited to Hive's decimal with maximum precision of 38 and scale of 38.

(3) Datameers BigInteger has an unlimited precision, this conversion is limited to hive's decimal with max precision 38 and scale 0

(4) Conversion of Datameer List's to Hive array uses the same logic to convert any underlying Datameer value type.

Newly Created Tables

There is a behavior change with Datameer 7.5 when a new table is created in the export wizard:

Datameer 7.4.x & earlier | Datameer 7.5+ Hive implicit | |

|---|---|---|

| BigDecimal | String | Decimal |

| Date | String | timestamp |

If you need to use the 7.4 Classic mode, you can set the BigDecimal or Date column to String in the workbook, which will be exported as String when using the Hive implicit (7.5+) mode.