Smart Execution

Datameer's Smart Execution™ technology dynamically chooses the best execution engine for your Datameer job depending on the job configuration and data volume. It can also combine multiple execution engines within a single job, allocating each task of a mixed workload to an engine according to available resources for maximum processing efficiency.

Smart Execution™ becomes more valuable as new computational frameworks join the Hadoop ecosystem. Data sets come in many different sizes, and some computing frameworks that work well for large data sets are less efficient when working with small amounts of data. Smart Execution™ selects the framework that performs best for the size of the data set.

Execution Frameworks

Datameer default execution framework

- Optimized MapReduce (Tez)

Datameer execution frameworks with Smart Execution™

- Optimized MapReduce. Uses the Apache Tez framework, which manages resources across multiple clusters. This framework optimizes resource utilization at runtime and enables dynamic physical data flow decisions. It is optimal for large data sets.

- SmallJob Execution. A framework that runs job tasks on a single node of the Hadoop cluster. This framework minimizes the amount of resources needed so smaller tasks are completed faster and more efficiently.

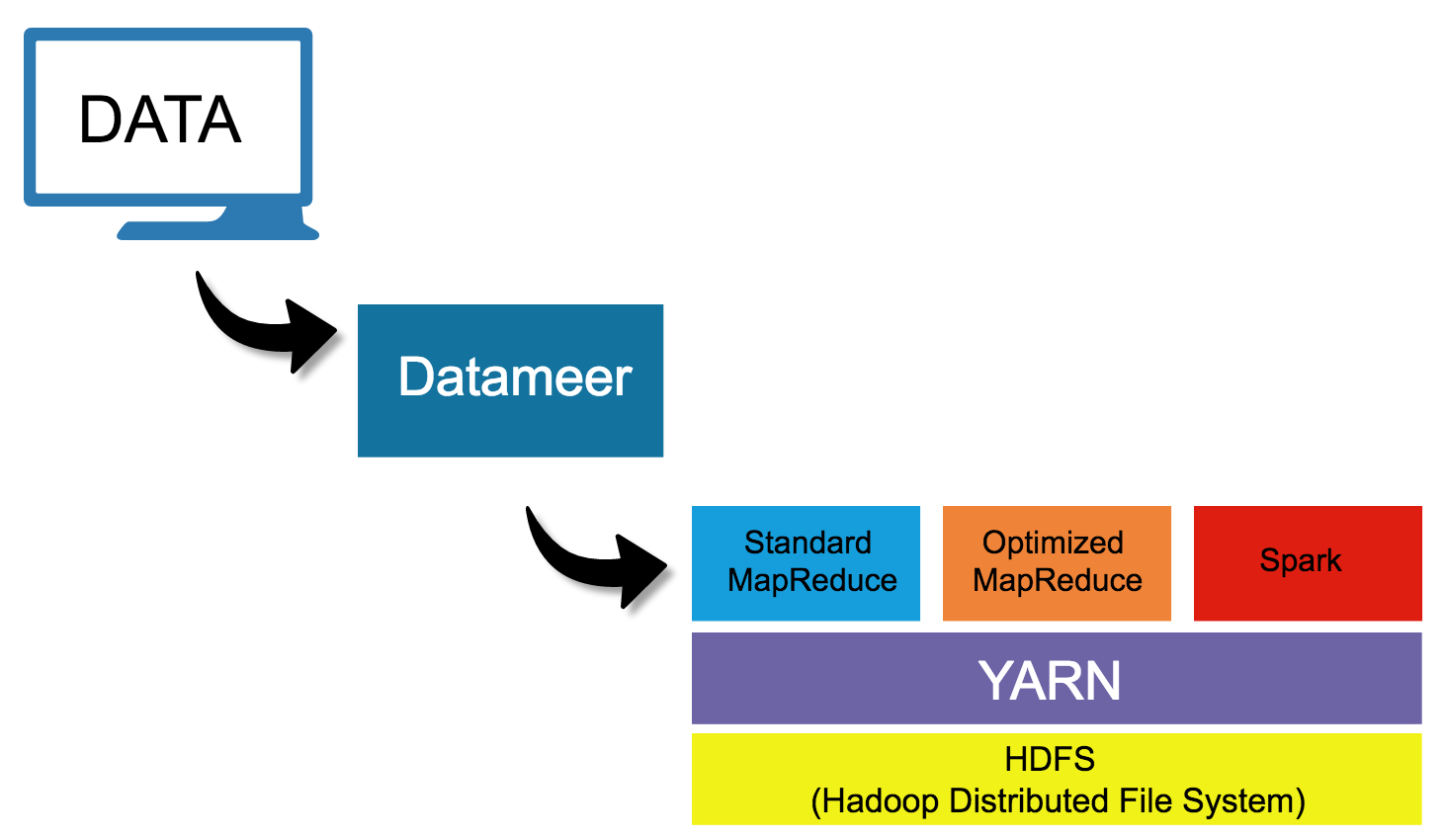

Data Flow Using Datameer's Smart Execution™

Datameer's goal is to make data analysis simple, whether you are working with 100 petabytes or just a few kilobytes. Smart Execution™ is a transparent process that runs behind the scenes to make the computation step faster and more efficient. After a job is started (an import job, export job, file upload, or workbook), Datameer selects the best computational framework based on the size of your data. The job then runs through YARN, which is the resource manager for all the computational engines. Finally, the data is stored in the Hadoop Distributed File System (HDFS).

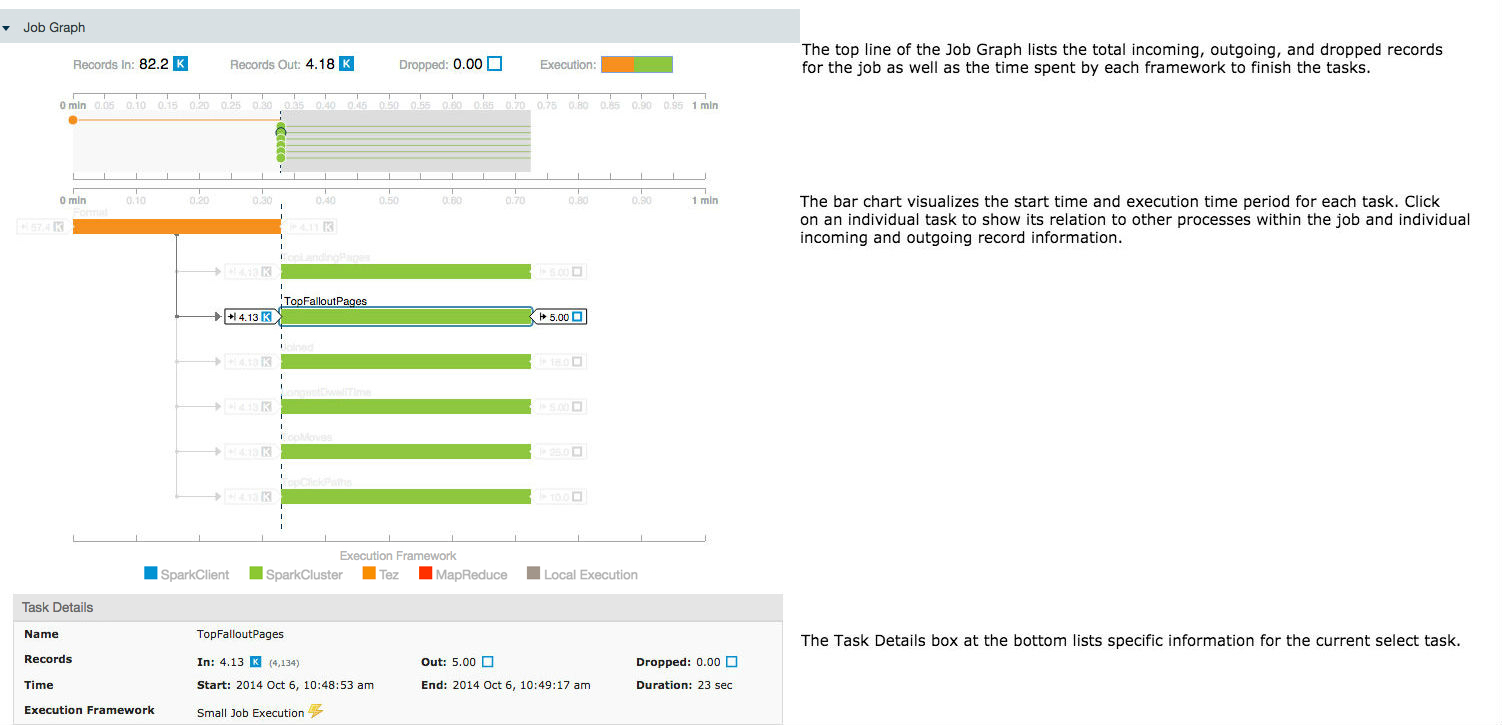
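The size-based selection described above can be pictured with a minimal sketch. This is not Datameer's implementation: the `Task` type, the `choose_engine` and `plan_job` functions, and the 64 MB cutoff are all hypothetical names and values chosen for illustration, and the sketch looks only at data volume while ignoring job configuration and available resources.

```python
from dataclasses import dataclass
from enum import Enum


class Engine(Enum):
    """The two execution frameworks named in this document."""
    OPTIMIZED_MAPREDUCE = "Optimized MapReduce (Tez)"
    SMALL_JOB = "SmallJob Execution"


# Hypothetical cutoff used only for this illustration; not a Datameer setting.
SMALL_JOB_MAX_BYTES = 64 * 1024 * 1024


@dataclass
class Task:
    """Hypothetical stand-in for one task of a job."""
    name: str
    input_bytes: int


def choose_engine(task: Task, small_job_max_bytes: int = SMALL_JOB_MAX_BYTES) -> Engine:
    """Pick an engine for a single task based only on its input size."""
    if task.input_bytes <= small_job_max_bytes:
        return Engine.SMALL_JOB
    return Engine.OPTIMIZED_MAPREDUCE


def plan_job(tasks: list[Task]) -> dict[str, Engine]:
    """Assign an engine to every task, so one job can mix engines."""
    return {task.name: choose_engine(task) for task in tasks}


if __name__ == "__main__":
    job = [
        Task("join_lookup", 2 * 1024),              # a few kilobytes
        Task("aggregate_events", 500 * 1024 ** 3),  # hundreds of gigabytes
    ]
    for name, engine in plan_job(job).items():
        print(f"{name}: {engine.value}")
```

Deciding per task rather than per job is what lets a single job use SmallJob Execution for its small steps and Optimized MapReduce for its large ones.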

Job Graph

The Job Graph is a visualization that shows which computational framework was used for each task of a job.

To view the Job Graph:

- Select the job (Import/Upload/Export/Workbook) and click Details.

- Click the Job ID.

- Open the Job Graph.