Configuring a Network Proxy

Setup

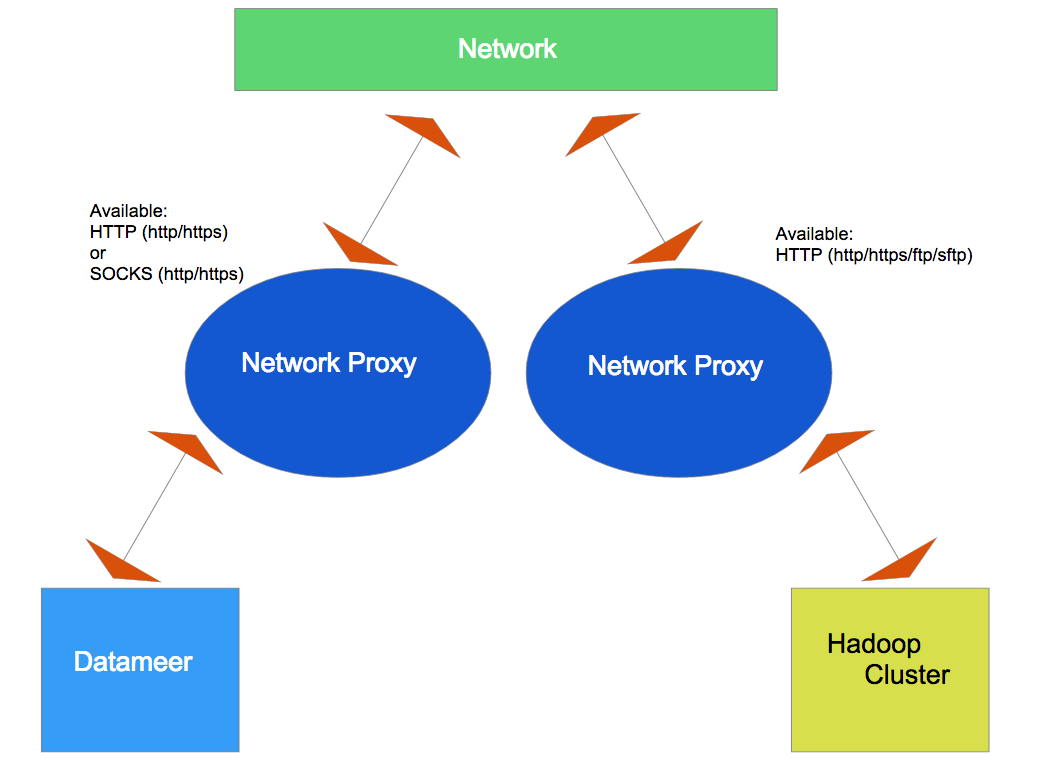

You can configure Datameer to route outgoing HTTP(S) connections through network proxy servers, both from Datameer itself and from the Hadoop cluster (for example, for web service connectors and import jobs).

You can configure either an HTTP or a SOCKS proxy for all outgoing HTTP and HTTPS traffic to the remote system.

Additionally, you can configure an HTTP proxy on the Hadoop cluster for outgoing FTP and SFTP traffic.

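- Datameer: HTTP proxy (http/https) or SOCKS proxy (http/https)

- Hadoop cluster: HTTP proxy (http/https/ftp/sftp) or SOCKS proxy (http/https)

- S3, S3 Native, Snowflake, and Redshift connectors: separate HTTP proxy settings for Datameer and for the Hadoop cluster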

Because each of these has its own set of properties, you can specify exactly which traffic each proxy handles.
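As a rough illustration of how the traffic is divided, the following Java sketch maps the origin of a connection (the Datameer web application or a cluster job) and its protocol to the property prefix in "das-common.properties" that covers it. The class and method names are hypothetical and this is not Datameer's implementation; "s3" stands for the S3, S3 Native, Snowflake, and Redshift connector traffic described in the configuration comments.

```java
import java.util.Locale;

public class ProxyPrefixSketch {

    /**
     * Hypothetical helper: returns the das-common.properties key prefix that governs
     * outgoing traffic for the given origin and protocol, or null if no proxy
     * setting covers that combination.
     */
    static String prefixFor(boolean fromClusterJob, String protocol) {
        String p = protocol.toLowerCase(Locale.ROOT);
        if (!fromClusterJob) {
            switch (p) {
                case "http":
                case "https":
                    return "das.network.proxy";
                case "s3":
                    return "das.network.proxy.s3";
                default:
                    return null; // FTP/SFTP proxies are only listed for the cluster
            }
        }
        switch (p) {
            case "http":
            case "https":
                return "das.clusterjob.network.proxy";
            case "ftp":
                return "das.clusterjob.network.proxy.ftp";
            case "sftp":
                return "das.clusterjob.network.proxy.sftp";
            case "s3":
                return "das.clusterjob.network.proxy.s3";
            default:
                return null;
        }
    }

    public static void main(String[] args) {
        System.out.println(prefixFor(false, "https")); // das.network.proxy
        System.out.println(prefixFor(true, "sftp"));   // das.clusterjob.network.proxy.sftp
        System.out.println(prefixFor(false, "ftp"));   // null
    }
}
```

Note that the FTP and SFTP prefixes only accept the HTTP proxy type, while the plain HTTP/HTTPS prefixes accept either SOCKS or HTTP.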

Configure the proxies in "das-common.properties" as follows:

    # Proxy settings for outgoing connections from the Datameer web application
    das.network.proxy.type=[SOCKS|HTTP]
    das.network.proxy.host=<host ip>
    das.network.proxy.port=<port>
    das.network.proxy.username=<username>
    das.network.proxy.password=<password>
    das.network.proxy.blacklist=<'|'-separated URL patterns>

    # Proxy settings for outgoing connections from the cluster, triggered by Datameer jobs
    # that fetch data from web services over HTTP or HTTPS
    das.clusterjob.network.proxy.type=[SOCKS|HTTP]
    das.clusterjob.network.proxy.host=<host ip>
    das.clusterjob.network.proxy.port=<port>
    das.clusterjob.network.proxy.username=<username>
    das.clusterjob.network.proxy.password=<password>
    das.clusterjob.network.proxy.blacklist=<'|'-separated URL patterns>
    # Automatically find all data nodes in the cluster and add them to the blacklist
    das.clusterjob.network.proxy.blacklist.datanodes.autosetup=true

    # Proxy settings for outgoing connections from the cluster, triggered by Datameer jobs
    # that fetch data from web services over FTP
    das.clusterjob.network.proxy.ftp.type=[HTTP]
    das.clusterjob.network.proxy.ftp.host=<host ip>
    das.clusterjob.network.proxy.ftp.port=<port>
    das.clusterjob.network.proxy.ftp.username=<username>
    das.clusterjob.network.proxy.ftp.password=<password>

    # Proxy settings for outgoing connections from the cluster, triggered by Datameer jobs
    # that fetch data from web services over SFTP
    das.clusterjob.network.proxy.sftp.type=[HTTP]
    das.clusterjob.network.proxy.sftp.host=<host ip>
    das.clusterjob.network.proxy.sftp.port=<port>
    das.clusterjob.network.proxy.sftp.username=<username>
    das.clusterjob.network.proxy.sftp.password=<password>

    # Proxy settings for the Datameer web application. These affect the traffic of the
    # S3, S3 Native, Snowflake, and Redshift connectors.
    das.network.proxy.s3.protocol=[HTTP]
    das.network.proxy.s3.host=<host ip>
    das.network.proxy.s3.port=<port>
    das.network.proxy.s3.username=<username>
    das.network.proxy.s3.password=<password>

    # Proxy settings for outgoing connections from the cluster, triggered by Datameer jobs
    # that fetch data over S3. These affect the traffic of the S3, S3 Native, Snowflake,
    # and Redshift connectors.
    das.clusterjob.network.proxy.s3.type=[HTTP]
    das.clusterjob.network.proxy.s3.host=<host ip>
    das.clusterjob.network.proxy.s3.port=<port>
    das.clusterjob.network.proxy.s3.username=<username>
    das.clusterjob.network.proxy.s3.password=<password>
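The following Java sketch shows one way settings like these could be turned into a standard java.net.Proxy and java.net.ProxySelector. It is not Datameer's code, and the class and method names are hypothetical. It assumes that blacklist entries are matched against the destination host and that "*" acts as a wildcard; the configuration only states that the patterns are '|'-separated, so verify the actual matching rules. It also assumes any type other than SOCKS means HTTP. The host names are placeholders.

```java
import java.io.IOException;
import java.io.StringReader;
import java.net.InetSocketAddress;
import java.net.Proxy;
import java.net.ProxySelector;
import java.net.SocketAddress;
import java.net.URI;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Properties;
import java.util.regex.Pattern;

public class ProxySettingsSketch {

    /** Builds a Proxy from "<prefix>.type/.host/.port"; returns NO_PROXY when no host is set. */
    static Proxy proxyFrom(Properties props, String prefix) {
        String host = props.getProperty(prefix + ".host", "").trim();
        if (host.isEmpty()) {
            return Proxy.NO_PROXY;
        }
        String port = props.getProperty(prefix + ".port");
        if (port == null) {
            throw new IllegalArgumentException(prefix + ".port must be set when " + prefix + ".host is set");
        }
        // Assumption: "SOCKS" selects a SOCKS proxy; any other value is treated as HTTP.
        String type = props.getProperty(prefix + ".type", "HTTP").trim();
        Proxy.Type proxyType = "SOCKS".equalsIgnoreCase(type) ? Proxy.Type.SOCKS : Proxy.Type.HTTP;
        return new Proxy(proxyType, InetSocketAddress.createUnresolved(host, Integer.parseInt(port.trim())));
    }

    /** Compiles the '|'-separated blacklist; assumes '*' is a wildcard and everything else is literal. */
    static List<Pattern> blacklistFrom(Properties props, String prefix) {
        List<Pattern> patterns = new ArrayList<>();
        for (String entry : props.getProperty(prefix + ".blacklist", "").split("\\|")) {
            String trimmed = entry.trim();
            if (trimmed.isEmpty()) {
                continue;
            }
            StringBuilder regex = new StringBuilder();
            String[] parts = trimmed.split("\\*", -1);
            for (int i = 0; i < parts.length; i++) {
                if (i > 0) {
                    regex.append(".*"); // each '*' matches any run of characters
                }
                regex.append(Pattern.quote(parts[i]));
            }
            patterns.add(Pattern.compile(regex.toString(), Pattern.CASE_INSENSITIVE));
        }
        return patterns;
    }

    /** A selector that connects directly to blacklisted hosts and uses the proxy for everything else. */
    static ProxySelector selectorFor(Properties props, String prefix) {
        Proxy proxy = proxyFrom(props, prefix);
        List<Pattern> blacklist = blacklistFrom(props, prefix);
        return new ProxySelector() {
            @Override
            public List<Proxy> select(URI uri) {
                String host = uri.getHost() == null ? "" : uri.getHost();
                for (Pattern pattern : blacklist) {
                    if (pattern.matcher(host).matches()) {
                        return Collections.singletonList(Proxy.NO_PROXY);
                    }
                }
                return Collections.singletonList(proxy);
            }

            @Override
            public void connectFailed(URI uri, SocketAddress address, IOException failure) {
                // A production selector might log or mark the proxy as unavailable; the sketch does nothing.
            }
        };
    }

    public static void main(String[] args) throws IOException {
        Properties props = new Properties();
        props.load(new StringReader(String.join("\n",
                "das.network.proxy.type=HTTP",
                "das.network.proxy.host=proxy.example.com",
                "das.network.proxy.port=3128",
                "das.network.proxy.blacklist=localhost|*.internal.example.com")));
        ProxySelector selector = selectorFor(props, "das.network.proxy");
        System.out.println(selector.select(URI.create("https://www.googleapis.com/adsense/v1.2/accounts")));
        System.out.println(selector.select(URI.create("http://db.internal.example.com/")));
    }
}
```

Registering such a selector with ProxySelector.setDefault(...) makes HttpURLConnection consult it. The same helpers could be pointed at the "das.clusterjob.network.proxy" prefix; the data node autosetup option is not modeled.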

Troubleshooting

To troubleshoot the proxy configuration, enable verbose logging by adding the following line to conf/log4j-production.properties:

    log4j.category.datameer.dap.common.util.network=DEBUG

The conductor.log and the Hadoop job logs then show entries such as the following:

    DEBUG [2013-10-01 12:14:53] (NetworkProxySelector.java:38) - Using proxy [SOCKS @ localhost/127.0.0.1:3128] for uri https://www.googleapis.com/adsense/v1.2/accounts.
    DEBUG [2013-10-01 12:14:54] (NetworkProxyAuthenticator.java:32) - Authentication requested for localhost/SOCKS5/SERVER
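The "Authentication requested for localhost/SOCKS5/SERVER" entry appears to reflect how the JDK asks a java.net.Authenticator for SOCKS5 credentials: the standard SOCKS implementation issues the request with the protocol string "SOCKS5" and requestor type SERVER rather than PROXY. The Java sketch below is a hypothetical authenticator (not Datameer's NetworkProxyAuthenticator) that returns the configured username and password only when the requesting host is the configured proxy host, so it does not depend on the requestor type. It uses Authenticator.requestPasswordAuthenticationInstance (Java 9 or later) to simulate such a request; the credentials are placeholders.

```java
import java.net.Authenticator;
import java.net.PasswordAuthentication;
import java.util.Properties;

public class ProxyAuthSketch {

    /**
     * Hypothetical authenticator that answers credential requests with the
     * "<prefix>.username" / "<prefix>.password" values, but only for the configured proxy host.
     */
    static Authenticator authenticatorFor(Properties props, String prefix) {
        String proxyHost = props.getProperty(prefix + ".host", "").trim();
        String user = props.getProperty(prefix + ".username");
        String password = props.getProperty(prefix + ".password", "");
        return new Authenticator() {
            @Override
            protected PasswordAuthentication getPasswordAuthentication() {
                // SOCKS5 requests arrive with requestor type SERVER (compare the log line),
                // so filter on the requesting host instead of RequestorType.PROXY.
                if (user == null || !proxyHost.equalsIgnoreCase(getRequestingHost())) {
                    return null;
                }
                return new PasswordAuthentication(user, password.toCharArray());
            }
        };
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        props.setProperty("das.network.proxy.host", "localhost");
        props.setProperty("das.network.proxy.username", "alice");
        props.setProperty("das.network.proxy.password", "secret");
        Authenticator auth = authenticatorFor(props, "das.network.proxy");
        // Simulate a request shaped like the one in the log: localhost / SOCKS5 / SERVER.
        PasswordAuthentication pa = auth.requestPasswordAuthenticationInstance(
                "localhost", null, 3128, "SOCKS5", "SOCKS authentication", null,
                null, Authenticator.RequestorType.SERVER);
        System.out.println(pa == null ? "no credentials" : pa.getUserName()); // prints "alice"
    }
}
```

To make the JDK use such an authenticator for all connections, it would be installed with Authenticator.setDefault(...).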