INFO

This page describes only the minimal set of steps needed for Datameer X to operate properly in your Hadoop environment. For a more complete guide to Hadoop cluster design, configuration, and tuning, see Hadoop Cluster Configuration Tips or the additional resources section below.

User Identity

Your Hadoop cluster is accessed by Datameer X as a particular Hadoop user. By default, the user is identified as the UNIX user who launched the Datameer X application (equivalent to the UNIX command 'whoami'). To ensure this works properly, you should create a user of the same name within Hadoop's HDFS for Datameer X to use exclusively for scheduling and configuration. This ensures the proper permissions are set, and that this user is recognized whenever the Datameer X application interacts with your Hadoop cluster.

The username used to launch the Datameer X application can be configured in <Datameer X folder>/etc/das-env.sh.

Permissions in HDFS

When running Datameer X with an on-premise Hadoop cluster (called "distributed mode"), you need to define an area (folder) within HDFS for Datameer X to store its private data. The Hadoop user created for Datameer X should have read/write access to this folder.

Hadoop permissions can be set with the following commands. Note that permission checking can be enabled/disabled globally for HDFS. See Configuring Datameer X in a Shared Hadoop Cluster for more information.

hadoop fs

[-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...]

[-chown [-R] [OWNER][:[GROUP]] PATH...]

[-chgrp [-R] GROUP PATH...]
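As a minimal sketch of how these commands fit together, the Python snippet below only assembles the `hadoop fs` invocations for creating a private folder and handing it to the Datameer X user; it does not run them. The folder `/user/datameer`, the user `datameer`, the group `hadoop`, and the mode `750` are illustrative assumptions, not values prescribed by Datameer X.

```python
import shlex


def hdfs_permission_commands(folder, owner, group, mode="750"):
    """Return the `hadoop fs` command lines that create `folder` and assign it to `owner`."""
    return [
        ["hadoop", "fs", "-mkdir", "-p", folder],                      # create the folder
        ["hadoop", "fs", "-chown", "-R", f"{owner}:{group}", folder],  # set owner and group
        ["hadoop", "fs", "-chmod", "-R", mode, folder],                # set the permission bits
    ]


if __name__ == "__main__":
    # Hypothetical folder, user, and group names; adjust to your cluster.
    for argv in hdfs_permission_commands("/user/datameer", "datameer", "hadoop"):
        print(shlex.join(argv))
```

Printing the commands first makes it easy to review them before running them as the HDFS superuser.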

If you experience a problem with the permissions of the staging directory when submitting jobs as a user other than the superuser, there are two solutions:

- Configure mapreduce.jobtracker.staging.root.dir to /user in the mapred-site.xml of the cluster.

- Or, change the permissions of the hadoop.tmp.dir (usually /tmp) inside HDFS to 777.

See the Hadoop documentation for additional information.

Local UNIX Permissions

Various components of your Hadoop and Datameer X environment (data nodes, task trackers, JDBC drivers, the Datameer X application server) use a folder for temporary storage or "scratch" space. Additionally, HDFS files are sometimes stored in this location by default (see the Hadoop documentation).

Datameer X searches internally for the possible locations (folder/directory) that have the most free space in the local filesystem to write temporary files.

Possible options:

- mapred.local.dir (Property is used in old MR API)

- mapreduce.cluster.local.dir (Property for new MR API)

- tez.runtime.framework.local.dirs (Property for TEZ mode)

- yarn.nodemanager.local-dirs (Property for Yarn)

- Java system temp folder

For each machine (the Datameer X server, Hadoop master and slaves), you must ensure that the local UNIX user running these processes (often "hadoop" or "datameer", depending on the component) has read/write permissions for these folders, and of course to /tmp (commonly used by some components). Different components (e.g. Hive) can use different, configurable locations for scratch space. Check your configuration files for details.
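The check described above can be approximated with a short script. This is only a sketch of the idea (filter candidate folders by read/write access, then order them by free space); it is not Datameer X's internal implementation, and the candidate paths are placeholders for the values of properties such as mapred.local.dir on your machines.

```python
import os
import shutil
import tempfile


def writable_dirs_by_free_space(candidates):
    """Return the candidates that exist and are readable/writable, most free space first."""
    usable = []
    for path in candidates:
        # A directory needs the execute bit as well to create files inside it.
        if os.path.isdir(path) and os.access(path, os.R_OK | os.W_OK | os.X_OK):
            usable.append((shutil.disk_usage(path).free, path))
    usable.sort(key=lambda item: item[0], reverse=True)
    return [path for _, path in usable]


if __name__ == "__main__":
    # Hypothetical candidates; replace with the folders configured on this machine.
    candidates = ["/tmp", tempfile.gettempdir(), "/path/that/does/not/exist"]
    for path in writable_dirs_by_free_space(candidates):
        print(path)
```

Run it as the same local UNIX user that runs the Datameer X or Hadoop process, since the result depends on that user's permissions.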

In particular, Datameer X makes use of the directory mapred.local.dir defined in your Hadoop configuration. Check that Datameer X has write access to this folder, both on the Datameer X server and cluster nodes. If not properly configured, you might see cryptic exceptions like the one reported here: https://issues.apache.org/jira/browse/MAPREDUCE-635

Make sure the Datameer X application is consistently run by the same user, so that certain log files and other temporary data aren't written as root and then locked when Datameer X is subsequently started by another user. This can lead to inconsistent states which are difficult to troubleshoot (without access to the log files).

Network Connectivity

Hadoop clusters are often secured behind firewalls, which might or might not be configured to allow Hadoop direct access to all data sources. The systems containing data to be analyzed, including web servers, databases, data warehouses, hosted applications, message queues, and mobile devices, are often in remote locations and accessible only through specific TCP ports. Normally, Datameer X is installed in the same LAN as Hadoop, and therefore suffers from the same connectivity issues, which can prevent you from using Datameer. Conversely, if Datameer X is located further away from Hadoop, it might have connectivity to the data but not to the Hadoop NameNode and JobTracker, for the same reasons. Most of these issues aren't anticipated early in the life cycle of a Hadoop project, and thus firewalls need to be reconfigured. Finally, the speed and latency of the network link between Datameer X, Hadoop, and your data can severely affect system performance and the end user experience. For these reasons, it's important to thoroughly review all network connectivity requirements up front.

Before you start using Datameer X, check that the following network connectivity is available on the specified ports:

- Datameer X to Hadoop NameNode client port: (normally 8020, 9000 or 54310)

- Datameer X to Hadoop JobTracker client port: (normally 8021, 9001 or 54311)

- Datameer X to Hadoop DataNodes (normally ports 50010 and 50020)

- (Recommended): Datameer X to Hadoop Administration consoles (normally 50030 and 50070)

Depending on your configuration, the following connectivity might also be necessary:

- Importing/exporting via SFTP:

  - Datameer X to source (normally port 22)

  - Hadoop slaves to source (normally port 22)

- Importing/linking/exporting to/from an RDBMS:

  - Datameer X to RDBMS via JDBC (various ports, see your DB documentation)

  - Hadoop slaves to RDBMS via JDBC (various ports, see your DB documentation)

- Connecting to Hive:

  - Hive Metastore (normally port 10000)

  - Location of external Hive tables, if applicable (e.g. S3 tables via port 443)

- Connecting to HBase:

  - Zookeeper client port (normally 2181)

  - HBase master (normally 60010)
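One way to verify the connectivity listed above is a plain TCP connection test, run from the Datameer X server and again from a Hadoop node. The sketch below uses only the Python standard library. The host names are hypothetical placeholders, and the ports are the usual defaults mentioned above, which may differ on your cluster.

```python
import socket


def port_is_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


# Hypothetical host names; replace with the hosts of your own cluster.
CHECKS = [
    ("namenode.example.com", 8020),
    ("jobtracker.example.com", 8021),
    ("datanode1.example.com", 50010),
    ("zookeeper.example.com", 2181),
]

if __name__ == "__main__":
    for host, port in CHECKS:
        status = "ok" if port_is_reachable(host, port) else "UNREACHABLE"
        print(f"{host}:{port} {status}")
```

A successful connection only shows that the port is open through the firewall; it does not confirm that the service behind it is healthy.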

Installed Compression Libraries

For standard Hadoop compression algorithms, you can choose the algorithm Datameer X should use. However, if your Hadoop cluster is using a non-standard compression algorithm such as LZO, you need to install these libraries onto the Datameer X machine. This is necessary so that Datameer X can read the files it writes to HDFS, and decompress files residing on HDFS which you wish to import. Libraries which utilize native compression require both a Java (JAR) and native code component (UNIX packages). The Java component is a JAR file that needs to be placed into <Datameer X folder>/etc/custom-jars. See "How do I configure Datameer/Hadoop to use native compression?" in Frequently Asked Hadoop Questions for more details.

The configuration of Hadoop compression can drastically affect Datameer X performance. See Hadoop Cluster Configuration Tips for more information.

Connecting Datameer X to Your Hadoop Cluster

By default, Datameer X isn't connected to any Hadoop cluster and operates in local mode, with all analytics and other functions performed by a local instance of Hadoop. This is useful for prototyping with small data sets, but not for high-volume testing or production. To connect Datameer X to a Hadoop cluster, change the settings on the Admin > Hadoop Cluster page: click the Admin tab at the top of the page, click the Hadoop Cluster tab in the left column, then click Edit and change the Mode setting.

These values can also be configured via /conf/live.properties. See /conf/default.properties for guidance.

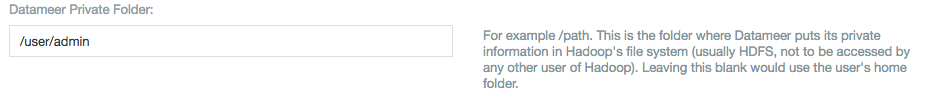

The Datameer X Private Folder must be set to the HDFS folder you defined for Datameer X's private data (see Permissions in HDFS).

While running, Datameer X stores imported data, sample data, workbook results, logs, and other information in this area (including subfolders such as importjobs, jobjars, temp). You need to make sure no other application interferes with data in this area of HDFS.

Mandatory Hadoop settings

Hadoop settings (properties) can be configured by Datameer X in three places:

- Global settings in Datameer X are located under the Administration tab in Hadoop Cluster.

- Per-job properties are configured for individual import/analytics jobs.

- Property files (under /conf).

Setting the following properties ("propertyname=value") ensures that Datameer X runs properly on your Hadoop cluster:

Caution

Datameer X sets numerous Hadoop properties in the /conf folder for performance and other reasons, specifically in das-job.properties. Don't change these properties without a clear understanding of what they are AND advice from Datameer X, as altering them can cause Datameer X jobs to fail.

WARNING

If any settings for the Hadoop cluster (mapred-site.xml, hdfs-site.xml, etc.) are set to FINAL, this overrides mandatory and optional Datameer X settings, and Datameer X might not work properly, or at all. Verify that FINAL is set to false for all settings configured by your Hadoop administrator or defaults set by your Hadoop distribution.

| Name | Value | Description | Location |
|---|---|---|---|
| mapred.map.tasks.speculative.execution | false | Datameer X currently doesn't support speculative execution. However, you don't need to configure these properties cluster-wide, because Datameer X disables speculative execution for every job it submits. You must ensure that these properties aren't set to 'true' cluster-wide with the final parameter also set to 'true', as that prevents a client from changing this property on a job-by-job basis. | das-job.properties |
| mapred.job.reduce.total.mem.bytes | 0 | Datameer X turns off in-memory shuffle, because it could lead to an 'out of memory exception' in the reduce phase. | das-job.properties |
| mapred.map.output.compression.codec | usually one of: | Datameer X cares about the what and the when of compression but not about the how. It uses the codec you have configured for your cluster. If you would like to change the codec to another available one, then set these properties in Datameer. Otherwise, Datameer X uses the default in | mapred-site.xml |
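To act on the warning above about FINAL settings, it can help to list which properties in a cluster configuration file are marked final. The following sketch parses the text of a `*-site.xml` file with Python's standard XML parser and returns the names of all properties carrying `<final>true</final>`. The sample XML is made up for illustration; in practice you would read your own mapred-site.xml or hdfs-site.xml.

```python
import xml.etree.ElementTree as ET


def final_properties(site_xml_text):
    """Return the names of all properties marked <final>true</final>."""
    root = ET.fromstring(site_xml_text)
    names = []
    for prop in root.iter("property"):
        final = (prop.findtext("final") or "").strip().lower()
        if final == "true":
            names.append((prop.findtext("name") or "").strip())
    return names


# Illustrative sample only; not taken from a real cluster.
SAMPLE = """<configuration>
  <property>
    <name>mapred.map.tasks.speculative.execution</name>
    <value>true</value>
    <final>true</final>
  </property>
  <property>
    <name>mapred.child.java.opts</name>
    <value>-Xmx1024M</value>
  </property>
</configuration>"""

if __name__ == "__main__":
    print(final_properties(SAMPLE))
```

Any property reported here that also appears in the Datameer X settings tables is a candidate for review with your Hadoop administrator.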

Highly Recommended Hadoop Settings

| Name | Value | Description | Location |
|---|---|---|---|
| mapred.map.child.java.opts | -Xmx1024M or more | By default, Datameer X is configured to work with a minimum 1024 MB heap. Based on your slot configuration, you might have significantly more memory available per JVM. | mapred-site.xml |
| mapred.child.java.opts | -Xmx1024M or more | By default, Datameer X is configured to work with a minimum 1024 MB heap. Based on your slot configuration, you might have significantly more memory available per JVM. | mapred-site.xml |
| mapred.tasktracker.dns.interface | | Hadoop nodes often have multiple network interfaces (internal vs. external). Explicitly choosing an interface can avoid problems. | |
| java.net.preferIPv4Stack | true | Disabling IPv6 in Java can resolve problems and improve throughput. Set this property to ensure IPv6 isn't used. | This can be configured in |
| mapreduce.jobtracker.staging.root.dir | /user | Avoids permission problems when a user other than superuser schedules jobs. (hadoop-0.21, cdh3b3 only) | mapred-site.xml (on the cluster site - doesn't work if configured as a custom property in Datameer) |

The ipc.client.connect.max.retries=5 Hadoop parameter is hard-coded in Datameer X and can't be changed.
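For the settings whose location is mapred-site.xml, the entries take the usual Hadoop `<property>` form with a `<name>` and a `<value>` element. As a rough illustration, the sketch below renders a dictionary of settings into that form. The helper name `site_xml` is hypothetical, and the two values are simply the ones from the table above.

```python
import xml.etree.ElementTree as ET


def site_xml(properties):
    """Render a {name: value} mapping as a Hadoop <configuration> XML string."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    settings = {
        "mapred.child.java.opts": "-Xmx1024M",
        "mapreduce.jobtracker.staging.root.dir": "/user",
    }
    print(site_xml(settings))
```

The output is a single unindented line; merge the generated `<property>` elements into the existing file rather than overwriting it.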

Highly Recommended YARN Settings

| Name | Value | Description | Location |
|---|---|---|---|
| yarn.app.mapreduce.am.staging-dir | /user | The staging dir used while submitting jobs. YARN requires a staging directory for temporary files created by running jobs. By default it creates /tmp/hadoop-yarn/staging with restrictive permissions that might prevent your users from running jobs. To forestall this, you should configure and create the staging directory yourself. | mapred-site.xml |
| yarn.application.classpath | | CLASSPATH for YARN applications. A comma-separated list of CLASSPATH entries. When this value is empty, the following default CLASSPATH for YARN applications is used. For Linux: $HADOOP_CONF_DIR, $HADOOP_COMMON_HOME/share/hadoop/common/*, $HADOOP_COMMON_HOME/share/hadoop/common/lib/*, $HADOOP_HDFS_HOME/share/hadoop/hdfs/*, $HADOOP_HDFS_HOME/share/hadoop/hdfs/lib/*, $HADOOP_YARN_HOME/share/hadoop/yarn/*, $HADOOP_YARN_HOME/share/hadoop/yarn/lib/* For Windows: %HADOOP_CONF_DIR%, %HADOOP_COMMON_HOME%/share/hadoop/common/*, %HADOOP_COMMON_HOME%/share/hadoop/common/lib/*, %HADOOP_HDFS_HOME%/share/hadoop/hdfs/*, %HADOOP_HDFS_HOME%/share/hadoop/hdfs/lib/*, %HADOOP_YARN_HOME%/share/hadoop/yarn/*, %HADOOP_YARN_HOME%/share/hadoop/yarn/lib/* | yarn-site.xml |
| yarn.app.mapreduce.am.env | /usr/lib/hadoop/lib/native | Custom Hadoop property that needs to be set for the native library with Hadoop distributions: CDH 5.3+, HDP2.2+, APACHE-2.6+ | 1 |

Job Scheduling and Prioritization

If you have configured a job scheduling/prioritization mechanism on your cluster (such as Fair Scheduler or Capacity Scheduler), you must decide which queue or pool Datameer X should use (see the Hadoop documentation for more information). To link Datameer X to your scheduling mechanism, do the following:

- Check your Hadoop configuration to see which property you should use to choose a pool for Datameer. For FairScheduler, this is mapred.fairscheduler.poolnameproperty, which is configured in conf/mapred-site.xml on your Hadoop cluster.

- Set this property globally for Datameer X at Administration > Hadoop Cluster > Custom Property (e.g., mapred.fairscheduler.poolnameproperty="datameerPool").

Additional Resources

- HDFS Permissions Guide: https://hadoop.apache.org/docs/stable1/hdfs_permissions_guide.html

- Security in Hadoop, Part – 1 (from the blog 'Big Data and etc.'): http://bigdata.wordpress.com/2010/03/22/security-in-hadoop-part-1

- Using Fair Scheduler: http://hadoop.apache.org/docs/stable1/fair_scheduler.html