...

Auto compaction ensures that import jobs which append data always store that data efficiently, even if they run very frequently. For example, an import job that runs every hour generates 24 files per day, and running it more often produces even more files of smaller size. Over time this fragments your data into many small files, which Hadoop processes less efficiently. Auto compaction merges these files automatically on a daily or monthly basis, depending on the configured schedule of the import job. This keeps system performance high regardless of the import job's update interval. To stop auto compaction, click Pause. To restart it, click Resume.
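The file-count arithmetic and the effect of compaction can be illustrated with a small model. The Python sketch below is purely illustrative and is not how Datameer X implements auto compaction. It assumes each import run writes exactly one file of a fixed size, and the functions `files_per_day` and `compact` are hypothetical names. It counts the files produced per day for a given run interval, then merges file records into one record per day or per month.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def files_per_day(interval_minutes: int) -> int:
    """Files an appending import job creates per day.

    Assumption (hypothetical model): every run writes exactly one file.
    """
    if interval_minutes <= 0 or 1440 % interval_minutes != 0:
        raise ValueError("interval must evenly divide 24 hours")
    return 1440 // interval_minutes


def compact(files, by="day"):
    """Merge (timestamp, size_mb) file records into one record per period.

    by="day" groups files by calendar day, by="month" by calendar month.
    Returns a sorted list of (period, total_size_mb) pairs.
    """
    formats = {"day": "%Y-%m-%d", "month": "%Y-%m"}
    if by not in formats:
        raise ValueError("by must be 'day' or 'month'")
    merged = defaultdict(int)
    for ts, size_mb in files:
        merged[ts.strftime(formats[by])] += size_mb
    return sorted(merged.items())


if __name__ == "__main__":
    # Two days of hourly runs, each producing a hypothetical 5 MB file.
    start = datetime(2024, 1, 1)
    runs = [(start + timedelta(hours=h), 5) for h in range(2 * files_per_day(60))]
    print(len(runs), "files before,", len(compact(runs)), "after daily compaction")
    # prints "48 files before, 2 after daily compaction"
```

Running the job every 15 minutes instead of hourly raises `files_per_day` from 24 to 96, which is why more frequent schedules fragment data faster and benefit more from compaction.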

...

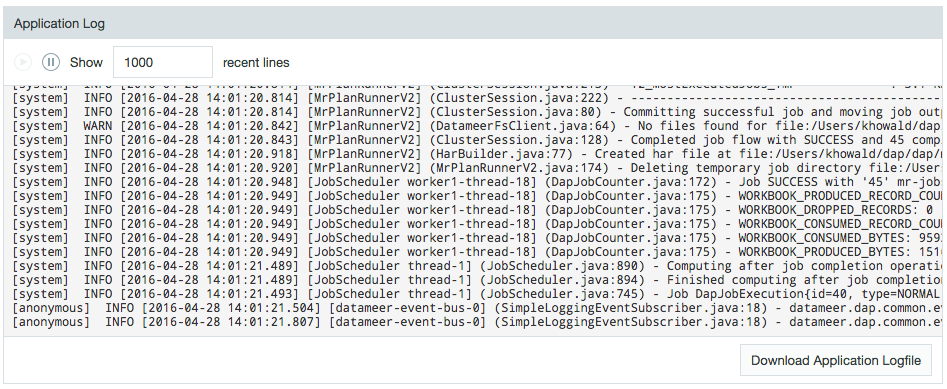

To run a job:

- Click the Admin tab at the top of the page, then click the Jobs History tab on the left.

- Click the name of the job and click Run.

The job is queued, runs, and then displays the results.

Depending on the volume of data, a job might take some time to run.

To view source details:

- Click the Admin tab at the top of the page, then click the Jobs History tab on the left.

- Click the name of the job and click Workbook Details.

- From here, you can edit the workbook by clicking Open. See Working with Workbooks to learn more.

- To view the complete data set, click View Latest Results. You can click the tabs to view each sheet in the workbook. You can scroll through the data, or click Go To Line to view a specific record. From here, you can click Open to edit the workbook.

From the Workbook Details page, click Details to view details about the job.

Note: Smart Execution jobs, which are marked as YARN applications in the manager, are listed there as successful even if a problem causes the Datameer X job to fail.

...