...

| Note |

|---|

Obfuscation is available with Datameer's Advanced Governance module. |

| Table of Contents |

|---|

Applicable Custom Properties

| Custom Property | Description | Default Value |

|---|---|---|

| das.conductor.default.record.sample.size |

| 1,000 |

Creating an Import Job

To create an import job:

...

Import job right-click in the

...

Browser and

...

select "Create New → Import Job".

Connection Tab

Click on "Select Connection", select the connection and confirm with "Select".

Select the file type and click "Next".

Data Details Tab

The fields on the 'Data Details' tab depend on the type of the file, however, there are several fields in common. In

...

the 'Encryption

...

' section, enter all columns to obfuscated with a space between the names.

Note that when a column is obfuscated, that data is never pulled into Datameer.

...

See the following sections for additional details about importing each of the file types:

- Apache log: specify the file or folder and the log format. See the samples provided in the dialog box for details.

- CSV/TSV files:

- Specify the delimiter such as"\t" for tab, comma ",", or semicolon ";".

- Specify if column headers should be made from the first non-ignored row.

- Specify the escape character to "escape" processing that character and just show it in Advanced Settings.

- Specify the quote character in advanced settings. If Enable strict quoting is selected, characters outside the quotes are ignored.

- Fixed width: specify the file or folder and specify if any of the first lines in the data should be skipped and then if column headers should be made from the first non ignored row.

- JSON: specify the file or folder and other parameters about what to parse within the JSON structure.

- Mbox: specify the file or folder. This is a format used for collections of electronic mail messages.

- Parquet: columnar storage format available in Hadoop.

- Regex Parsable Text files: specify the file or folder, a Regex pattern for processing the data (see note below), and specify if any of the first lines in the data should be skipped and then if column headers should be made from the first non ignored row.

- Twitter data: specify the file or folder.

XML data: specify the file or folder, the root element, container element, and XPath expressions for the fields you would

Note title Custom Headers For all types, you can:

- Specify that the column headers are in a separate file by selecting Provide custom schema. Indicate where the schema is located and the delimiter characters. The first row of that file is assumed to be column headers.

- Specify a number of lines to be ignored on import from the source data, including the header file using Ignore first n lines, under Advanced.

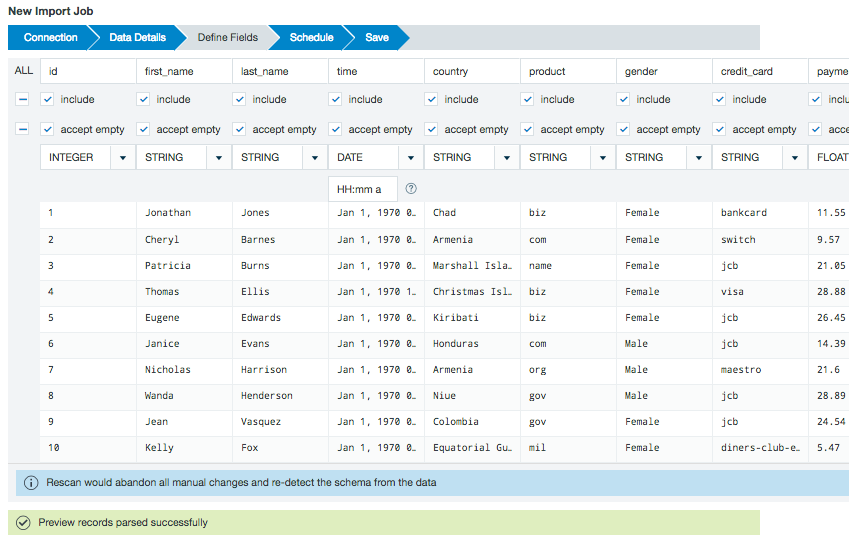

Define Fields Tab

View a sample of the data set to confirm this is the data source you want to use. Use the checkboxes to select which fields to import into Datameer. The accept empty checkbox allows you to specify if NULL and empty values are used or dropped upon import. Verify or select a data type for each column from the drop down menu. You can specify the format for date type fields. Click the question mark icon in the data format field to see a complete list of supported date pattern formats.

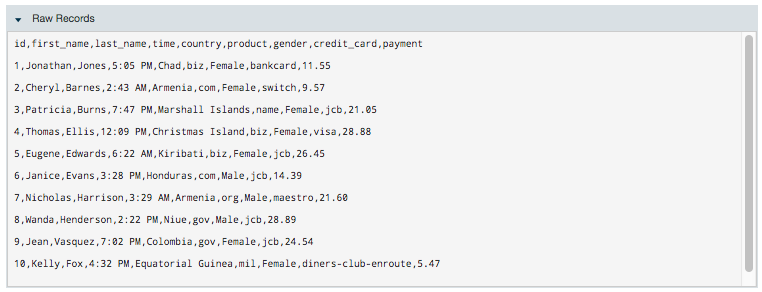

The Raw Records section shows how your data is viewed by Datameer X before the import.

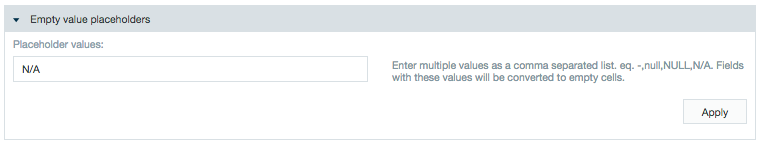

The Empty value placeholders section is a feature giving you the ability to assign specific values as /wiki/spaces/DASSB70/pages/33036123891. Values added here aren't imported into Datameer.

The How to handle invalid data? section lets you decide how to proceed if part of a /wiki/spaces/DASSB70/pages/33036123891 doesn't fit with the defined schema during import.

Selecting the option to drop the record removes the entire record from the import job. The option to abort the job stops the import job when an invalid record is detected.

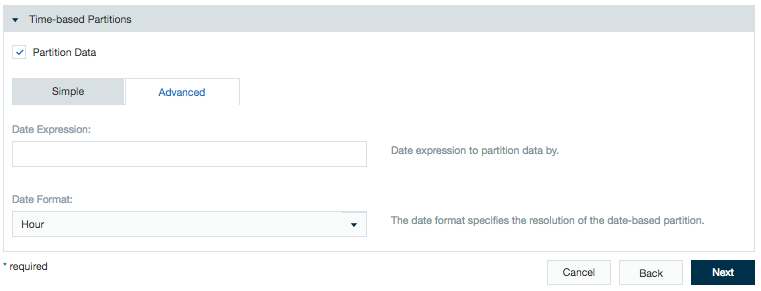

You can partition your data using date parameters. When this data is loaded into a workbook, you can choose to run your calculations on all or on just a part of your data. Also if you decide to export data, you can choose to export all or just a part of your data.

Click Next.

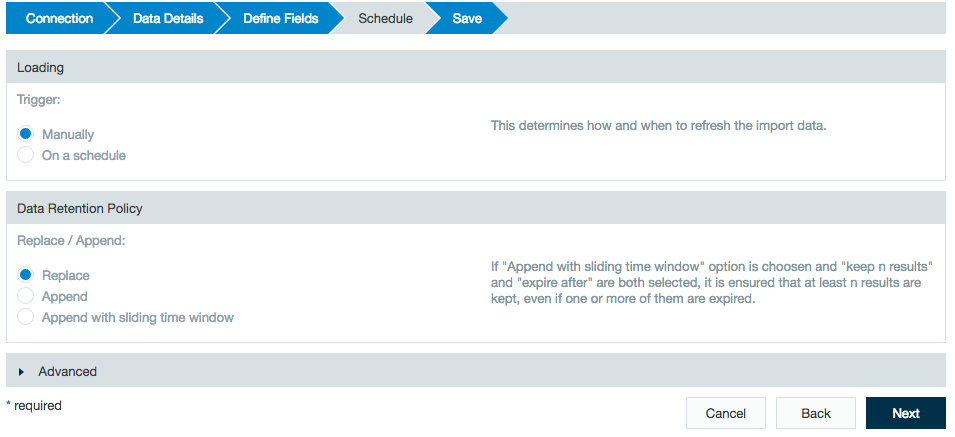

Schedule Tab

Define the schedule details.Anchor schedule_details schedule_details

In the Loading section, select Manually to rerun the import job in order to update or On a schedule to run the import job update at a specified time.

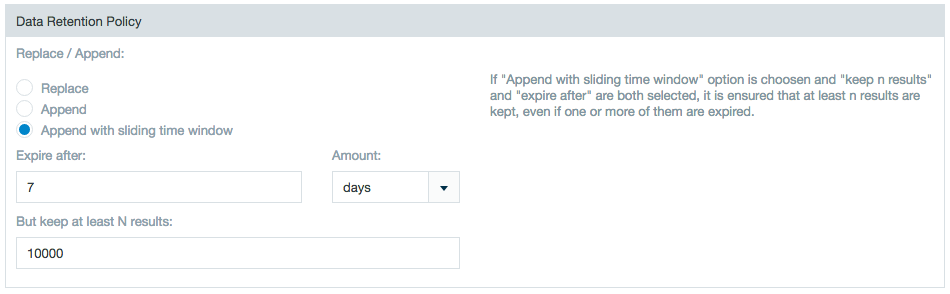

In the Data Retention Policy section choose whether to replace new updated data or to append it to existing data when updating an import job. You also have the option to choose Append with sliding time window to define a range during which the update expires and how many results to keep.Anchor data_retention data_retention Anchor columnchange columnchange Note title Adding or deleting columns from an appended import job The appended import jobs can contain additional or fewer columns than the previously run import job. When the schema is rescanned, Datameer X notices that columns have changed so no data is lost.

However, data can be lost due to append changes in these circumstances:

- If a column name has been renamed then the schema uses the column with the new name and deletes data from the old column name.

- If the data type of a column has been changed

- If a change in the partition schema is made the data resets starting from the new change

Save Tab

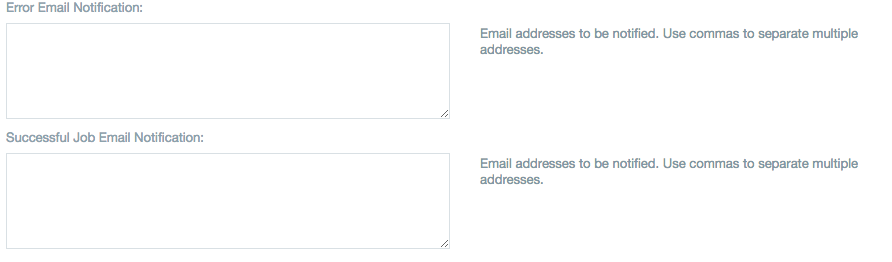

Add a description, name the file, click the checkbox to start the import immediately if desired, and click Save. You can also specify notification emails to be sent for any error messages and when a job has completed successfully. Use a comma to separate multiple email addresses. The maximum character count in these fields is 255.

| Note |

|---|

Note: Sample Regex pattern for importing data: (\S+) (\S+) (\S+) (\S+) (\S+) See Importing with Regular Expressions to learn more. |

Type Conversions

- Integer columns can be imported as date by interpreting the integer value as UNIX timestamp or epoch timestamp.

- Date columns can be converted as integer, the converted columns are shown as an epoch timestamp.

- Strings can be converted to Boolean, where "false", "no", "f", "n" and "0" are converted to false and "true", "yes", "t", "y" and "1" are converted to true.

...